Scientific machine learning (SciML) is a field within AI for Science that uses AI to enhance and accelerate the modeling and computer simulation of physical phenomena. In particular, deep geometric mechanics integrates insights from analytical mechanics, differential geometry, and scientific computing into deep learning. This makes it possible to automatically model physical phenomena (such as wave propagation and crystal growth) whose detailed mechanisms and governing equations are not yet understood, enabling fast and accurate computer simulations and the discovery of physical laws from data.

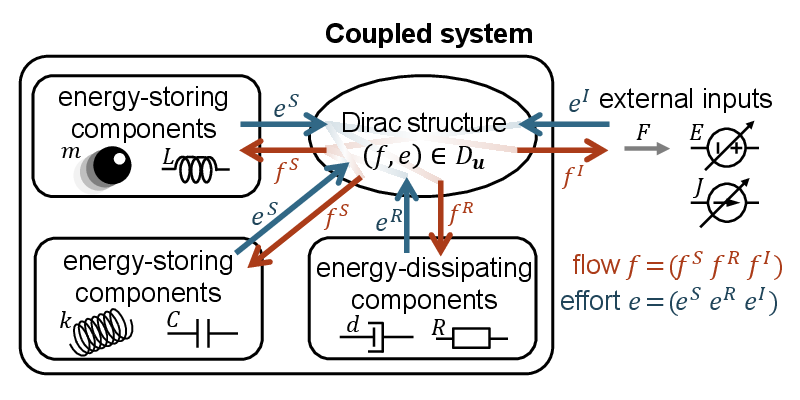

Most deep learning-based physics models focus only on mechanical systems and treat each system as a monolithic whole. These limitations reduce their applicability to electrical and hydraulic systems and to coupled systems. To address them, we propose Poisson-Dirac Neural Networks (PoDiNNs), which are based on the Dirac structure that unifies the port-Hamiltonian and Poisson formulations from geometric mechanics. This framework enables a unified representation of various dynamical systems across multiple domains, as well as of their interactions and the degeneracies that arise from couplings.

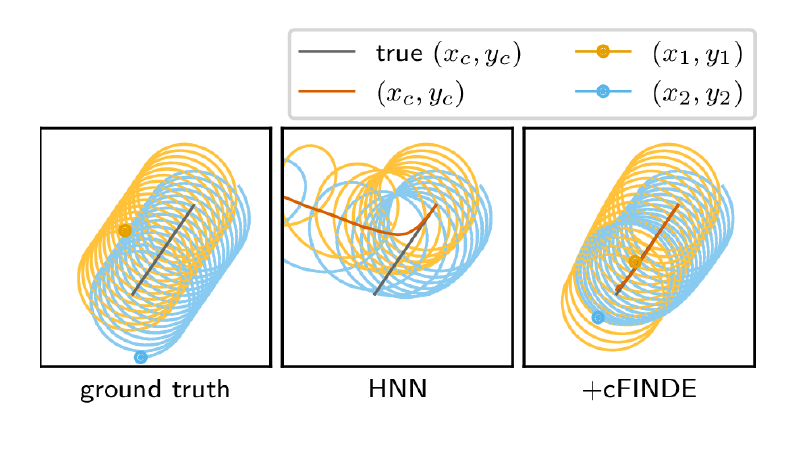

When modeling physical phenomena from data using deep learning, many methods, such as Hamiltonian neural networks, improve modeling performance by incorporating known physical laws. However, when a neural network learns an unknown dynamical system, the associated laws are also unknown, so it is often unclear which method to use. We therefore proposed FINDE, a method that applies projection methods to discover various types of invariants from data and to ensure high-precision time series predictions.
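The projection idea can be illustrated with a minimal sketch. This is not FINDE itself: here the invariant is given in closed form (FINDE would discover it from data), the harmonic oscillator stands in for a learned model, and all function names are hypothetical. After each explicit Euler step, the state is pulled back onto the level set of the invariant along its gradient.

```python
import numpy as np

def vector_field(u):
    """Harmonic oscillator du/dt = (p, -q); a stand-in for a learned model."""
    q, p = u
    return np.array([p, -q])

def invariant(u):
    """Known invariant V(u) = (q^2 + p^2) / 2 (assumed given, not learned)."""
    return 0.5 * np.dot(u, u)

def grad_invariant(u):
    """Gradient of V with respect to u."""
    return u

def project(u, target, iters=5):
    """Pull u back onto the level set V(u) = target with Newton-type updates along grad V."""
    for _ in range(iters):
        g = grad_invariant(u)
        u = u - (invariant(u) - target) / np.dot(g, g) * g
    return u

def simulate(u0, h, n_steps, use_projection):
    """Integrate with explicit Euler and return the final state and the worst invariant drift."""
    u = np.array(u0, dtype=float)
    target = invariant(u)
    drift = 0.0
    for _ in range(n_steps):
        u = u + h * vector_field(u)       # the Euler step leaves the level set
        if use_projection:
            u = project(u, target)        # the projection step restores the invariant
        drift = max(drift, abs(invariant(u) - target))
    return u, drift

_, drift_plain = simulate([1.0, 0.0], h=0.1, n_steps=100, use_projection=False)
_, drift_proj = simulate([1.0, 0.0], h=0.1, n_steps=100, use_projection=True)
print(drift_plain, drift_proj)
```

Without projection the invariant drifts steadily under explicit Euler; with projection it stays at its initial value up to rounding error.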

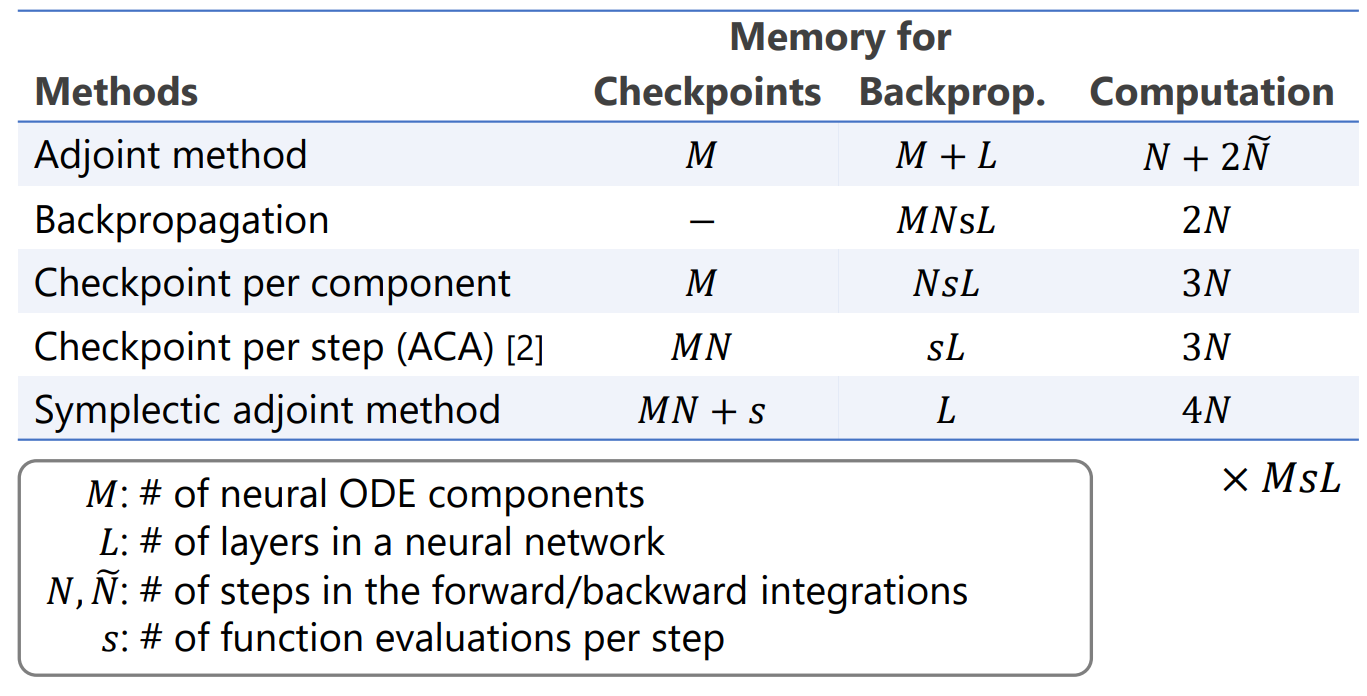

Neural ODEs, which learn ordinary differential equations (ODEs) with neural networks, can model continuous-time dynamical systems and continuous probability density functions with high accuracy. However, because the same network must be applied repeatedly, training with backpropagation requires a large memory footprint. The adjoint method is memory efficient, but it incurs considerable computational cost to suppress numerical errors. In this study, we combined the symplectic numerical integration method used in the adjoint method with an appropriate checkpointing method to achieve both memory efficiency and fast gradient computation.
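The memory trade-off behind checkpointing can be sketched on a toy problem. The example below is an illustration under simplifying assumptions, not the method of the study: it uses a scalar ODE with a hand-written right-hand side `f` (hypothetical), explicit Euler rather than a symplectic integrator, and a uniform checkpoint spacing `every`. The forward pass stores only every `every`-th state; the backward pass recomputes each segment from its checkpoint before backpropagating through it.

```python
import numpy as np

def f(x, theta):
    """Toy right-hand side dx/dt = theta * tanh(x); a stand-in for a neural network."""
    return theta * np.tanh(x)

def df_dx(x, theta):
    return theta * (1.0 - np.tanh(x) ** 2)

def df_dtheta(x, theta):
    return np.tanh(x)

def euler_step(x, theta, h):
    return x + h * f(x, theta)

def grad_checkpointed(x0, theta, h, n_steps, every):
    """Return x_N and d x_N / d theta, storing only every `every`-th state."""
    # Forward pass: keep sparse checkpoints instead of the full trajectory.
    checkpoints = {}
    x = x0
    for n in range(n_steps):
        if n % every == 0:
            checkpoints[n] = x
        x = euler_step(x, theta, h)
    x_final = x

    # Backward pass: recompute one segment at a time, then backpropagate through it.
    adjoint, grad = 1.0, 0.0
    for start in sorted(checkpoints, reverse=True):
        stop = min(start + every, n_steps)
        xs = [checkpoints[start]]
        for _ in range(start, stop - 1):
            xs.append(euler_step(xs[-1], theta, h))
        for xn in reversed(xs):
            grad += adjoint * h * df_dtheta(xn, theta)   # uses the adjoint of step n+1
            adjoint *= 1.0 + h * df_dx(xn, theta)        # propagate the adjoint to step n
    return x_final, grad

x_final, grad = grad_checkpointed(1.0, 0.5, 0.01, 1000, every=50)
print(x_final, grad)
```

With `every=1` this reduces to ordinary backpropagation that keeps every state; larger values store fewer states at the price of one extra forward recomputation per segment.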

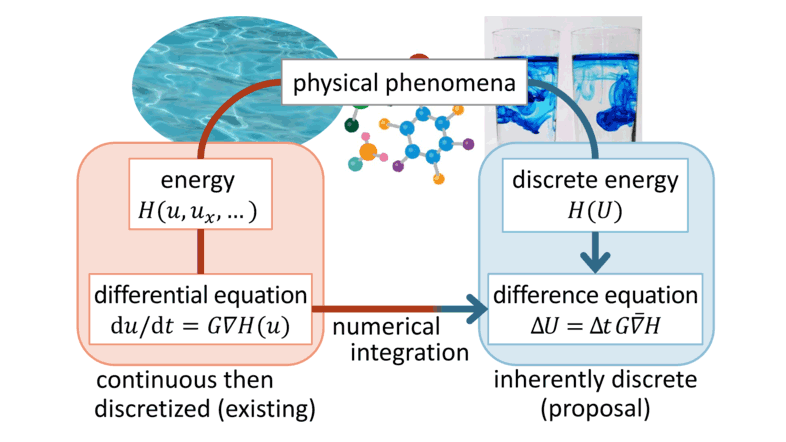

The governing equations of physical phenomena are often defined in continuous time, but computational simulations must be performed in discrete time. The resulting discretization errors break physical laws such as energy conservation and dissipation, which makes the outcomes unreliable. Discrete gradient methods have been studied to avoid this, but they require manual transformation of the equations, making them difficult to apply to machine learning. This study proposed the automatic discrete differentiation algorithm, which enables the application of discrete gradient methods to deep learning. This allows for simulations that strictly preserve energy conservation and dissipation laws while learning the target dynamical system from data using deep learning.
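A minimal sketch of a discrete gradient scheme helps show why energy is preserved exactly. It assumes a separable Hamiltonian H(q, p) = p²/2 + V(q) with a pendulum potential chosen for illustration, and it uses a hand-written difference quotient as the discrete gradient. That manual step is exactly what the automatic discrete differentiation algorithm is meant to replace, so this is not the proposed algorithm; the function names are hypothetical.

```python
import numpy as np

def potential(q):
    """Pendulum potential V(q) = -cos(q) (illustrative choice)."""
    return -np.cos(q)

def energy(q, p):
    return 0.5 * p ** 2 + potential(q)

def discrete_grad_potential(q0, q1):
    """Difference quotient (V(q1) - V(q0)) / (q1 - q0), with V'(midpoint) as the q1 -> q0 limit."""
    dq = q1 - q0
    if abs(dq) < 1e-12:
        return np.sin(0.5 * (q0 + q1))
    return (potential(q1) - potential(q0)) / dq

def discrete_gradient_step(q, p, h, iters=100, tol=1e-14):
    """Solve the implicit scheme
         (q1 - q) / h = (p + p1) / 2
         (p1 - p) / h = -discrete_grad_potential(q, q1)
       for (q1, p1) by fixed-point iteration on p1."""
    p1 = p
    for _ in range(iters):
        q1 = q + 0.5 * h * (p + p1)
        p_next = p - h * discrete_grad_potential(q, q1)
        converged = abs(p_next - p1) < tol
        p1 = p_next
        if converged:
            break
    return q + 0.5 * h * (p + p1), p1

q, p = 2.0, 0.0
e0 = energy(q, p)
max_drift = 0.0
for _ in range(1000):
    q, p = discrete_gradient_step(q, p, h=0.1)
    max_drift = max(max_drift, abs(energy(q, p) - e0))
print(max_drift)
```

Multiplying the two update equations shows that H(q1, p1) - H(q, p) cancels term by term, so the only energy error left comes from the fixed-point solver and floating-point rounding.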

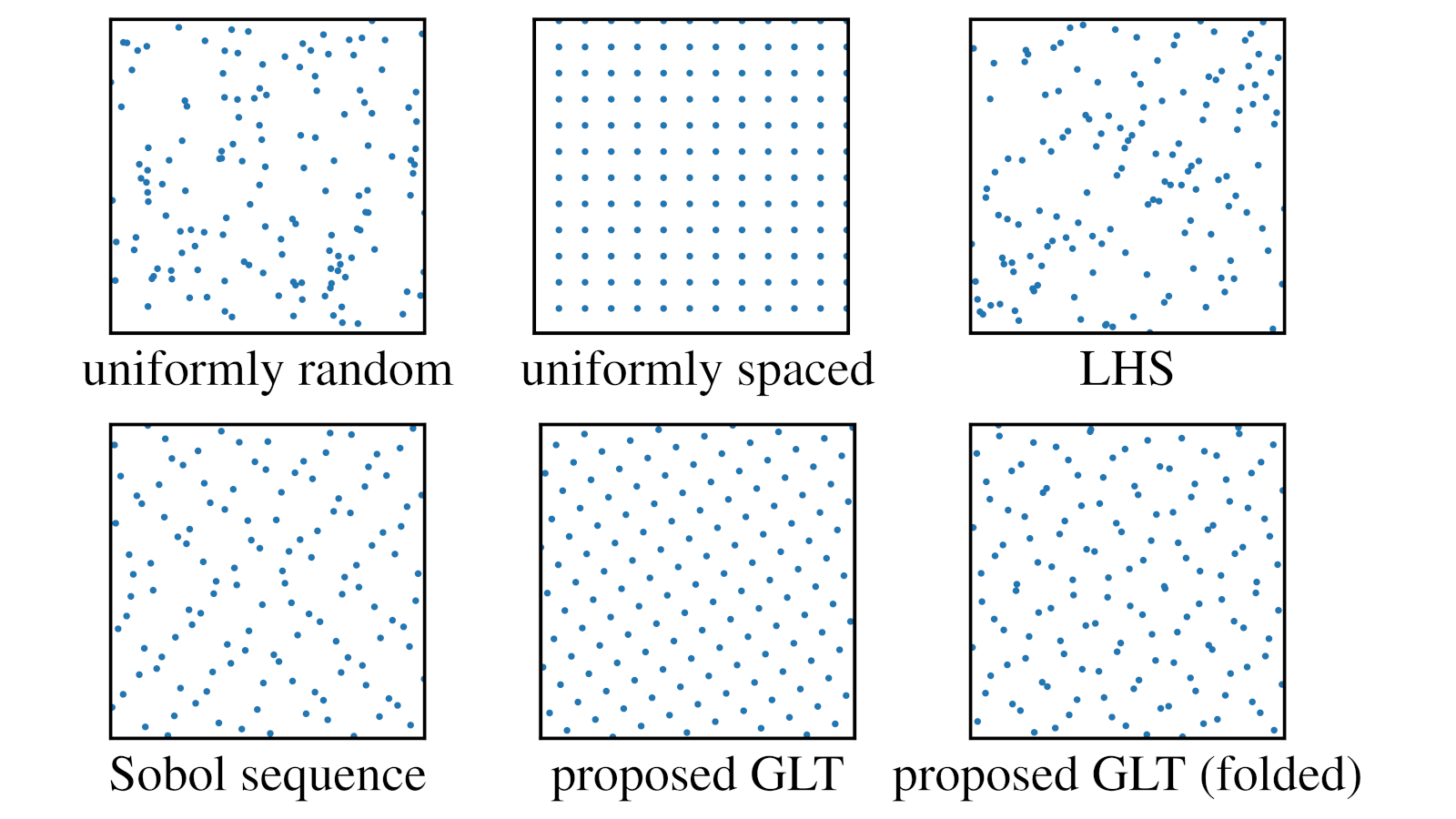

Physics-informed neural networks (PINNs) use neural networks as basis functions to represent solutions of partial differential equations (PDEs), allowing for flexible solution representation and easy extension to inverse problems. In PINNs, the network is trained to satisfy the PDE at a finite number of evaluation points, so the choice of evaluation points directly affects learning efficiency and accuracy. This study applied the good lattice point method, which is based on number-theoretic approaches, to PINNs and proposed several techniques to meet its prerequisites. As a result, learning was accelerated by a factor of 2 to 7 for low-dimensional PDEs.
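As a rough illustration of lattice-based evaluation points, the sketch below builds a two-dimensional Fibonacci lattice, a classical example of good lattice points. It is not necessarily the construction used in the study, and the function name `fibonacci_lattice` is hypothetical. With N equal to the k-th Fibonacci number and generator vector (1, F_{k-1}), the i-th point is the fractional part of i · (1, F_{k-1}) / N.

```python
import numpy as np

def fibonacci_lattice(k):
    """Return the 2D rank-1 lattice with N = F_k points and generator (1, F_{k-1})."""
    a, b = 1, 1                        # F_1, F_2
    for _ in range(k - 2):
        a, b = b, a + b                # afterwards a = F_{k-1}, b = F_k
    n = b
    z = np.array([1, a])               # generator vector
    i = np.arange(n)[:, None]
    return (i * z % n) / n             # fractional parts, shape (N, 2), values in [0, 1)

points = fibonacci_lattice(10)         # F_10 = 55 collocation points in the unit square
print(points.shape)
```

In a PINN, such points would take the place of uniformly random or grid-based collocation points when evaluating the PDE residual on a rescaled unit square.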